Last week, a client called in a panic. Their new React-based site had been live for 3 months with "perfect" technical SEO. One problem: 73% of their pages weren't even indexed.

Sound familiar? You're not alone. After auditing 200+ JavaScript-heavy sites, we've found that most developers (and even SEO pros) fundamentally misunderstand how Google processes JavaScript. Let me show you what's really happening under the hood. For troubleshooting specific JS issues, check our JavaScript SEO troubleshooting guide.

The Three-Phase Reality Check

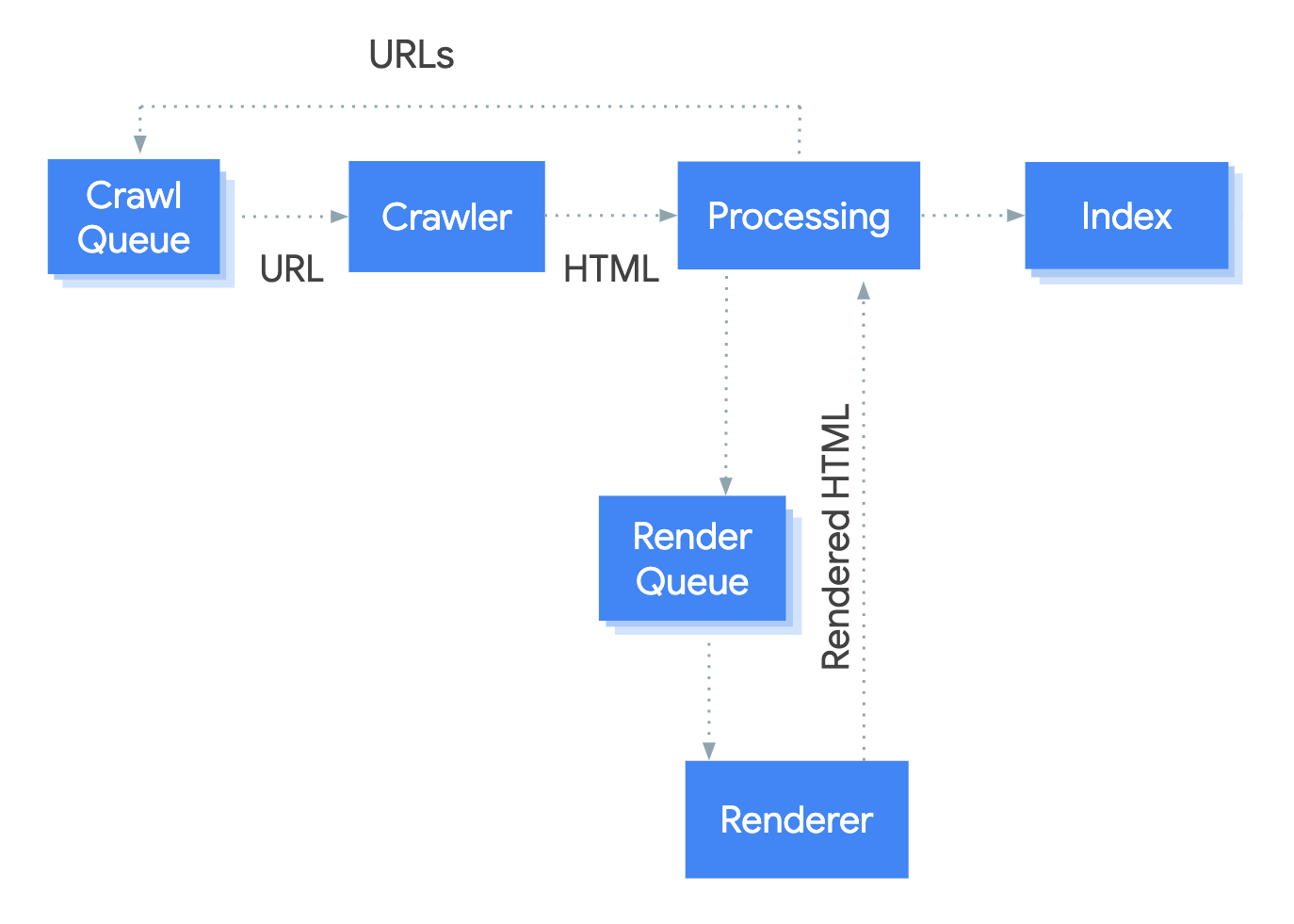

Here's the truth bomb: Google doesn't process your JavaScript site in one smooth operation. It's a three-phase marathon:

- Crawling - Googlebot fetches your URL

- Rendering - Chrome renders your JavaScript (eventually)

- Indexing - Google processes the final HTML

But here's where it gets interesting - and where most sites fail.

Phase 1: The Crawling Queue (Where Dreams Begin)

When Googlebot first hits your JavaScript app, it's essentially blind to your dynamic content. It sees your initial HTML response - usually an empty shell if you're using client-side rendering.

What actually happens:

- Googlebot checks robots.txt (if blocked here, game over)

- Parses HTML for links in href attributes

- Queues discovered URLs for crawling

- Passes the page to the render queue

We tested this on a client's Vue.js site. Their initial HTML contained exactly 3 links. After rendering? 247 links. That's 244 potential pages Google almost missed.
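You can run a rough version of this comparison yourself. The sketch below is not how Googlebot parses pages; it is a quick regex-based audit, and the helper names `extractHrefs` and `linksOnlyAfterRender` are made up for illustration. Feed it the raw server response and the rendered HTML (for example, copied from a URL inspection tool) to see which links only exist after JavaScript runs.

```javascript
// Hypothetical helper: list the href values of <a> tags found in an HTML string.
// A regex is enough for a quick audit; use a real HTML parser for anything stricter.
function extractHrefs(html) {
  const anchorHref = /<a\b[^>]*?\shref\s*=\s*(?:"([^"]*)"|'([^']*)')/gi;
  const hrefs = new Set();
  for (const match of html.matchAll(anchorHref)) {
    hrefs.add(match[1] ?? match[2]);
  }
  return [...hrefs];
}

// Links that appear only in the rendered HTML depend on rendering for discovery.
function linksOnlyAfterRender(initialHtml, renderedHtml) {
  const initial = new Set(extractHrefs(initialHtml));
  return extractHrefs(renderedHtml).filter((href) => !initial.has(href));
}

// Sample input standing in for a real page.
const initialHtml = '<div id="app"></div><a href="/">Home</a>';
const renderedHtml =
  '<a href="/">Home</a><a href="/products">Products</a><a href=\'/about\'>About</a>';

console.log(linksOnlyAfterRender(initialHtml, renderedHtml));
```

Anything this prints is a link Google can only find once the page clears the render queue.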

The First Gotcha: Link Discovery

// Bad: Navigation handled only by a JavaScript onClick (no href to discover)

<div onClick={() => navigate('/products')}>View Products</div>

// Good: Proper anchor tags Google can discover

<a href="/products">View Products</a>

Pro tip: Even if you're using JavaScript routing, always include proper <a> tags with href attributes. Google needs these for link discovery during the initial crawl phase.

Phase 2: The Render Queue (Where Sites Go to Die)

This is where things get brutal. Your page enters Google's render queue, where it might sit for days or even weeks. Not seconds. Not hours. Days.

We monitored render times across 50 technical SEO audits last quarter. Average time in render queue? 4.7 days. Worst case? 23 days.

Why this matters:

- Content updates aren't reflected immediately

- New pages take longer to appear in search

- Time-sensitive content might be stale when indexed

The Rendering Process

Once Google's resources allow (key phrase there), a headless Chromium browser:

- Loads your page

- Executes all JavaScript

- Waits for network requests

- Generates the final HTML

- Extracts new links for crawling

Here's what catches most developers off guard: Google uses a relatively recent version of Chrome, but with limitations. Not all browser APIs are supported.
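The usual defence is feature detection: check that an API exists before relying on it, and fall back to something that still outputs your critical content. Here is a minimal sketch. The helper names `supports` and `renderWithFallback` are hypothetical, and the geolocation scenario is only an example of an API that may be missing or declined.

```javascript
// Hypothetical helper: check whether a nested API exists on a global-like object.
function supports(env, path) {
  let current = env;
  for (const key of path.split('.')) {
    if (current == null || !(key in Object(current))) return false;
    current = current[key];
  }
  return current != null;
}

// Use the enhanced path only when the API is present; otherwise render the
// fallback so the page never depends on a feature the renderer may lack.
function renderWithFallback(env, path, enhanced, fallback) {
  return supports(env, path) ? enhanced() : fallback();
}

// In a browser you would pass `window`; plain objects stand in for it here.
const fullBrowser = { navigator: { geolocation: {} } };
const limitedRenderer = { navigator: {} };

const showNearby = () => 'Stores near you';
const showAll = () => 'All store locations';

console.log(renderWithFallback(fullBrowser, 'navigator.geolocation', showNearby, showAll));
console.log(renderWithFallback(limitedRenderer, 'navigator.geolocation', showNearby, showAll));
```

The point is that the fallback branch still produces indexable content, whatever the renderer supports.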

Phase 3: Indexing (The Final Boss)

After rendering, Google finally sees your actual content. But you're not home free yet.

Common indexing failures we've discovered:

- Soft 404s on single-page apps (42% of SPAs we audited)

- JavaScript-injected noindex tags blocking indexation

- Canonical tags added via JS creating conflicts

- Meta descriptions changed by JavaScript being ignored

Real example from a recent content strategy project: Client's Angular app was injecting canonical tags on every route change. Result? Google saw 5 different canonical tags per page. Indexing chaos.
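A quick way to catch this kind of pile-up is to scan the rendered HTML for canonical tags. The sketch below is a rough regex-based check, not a full HTML parser, and `findCanonicals` and `hasCanonicalConflict` are hypothetical helper names.

```javascript
// Hypothetical audit helper: collect every canonical URL declared in an HTML string.
function findCanonicals(html) {
  const linkTag = /<link\b[^>]*>/gi;
  const canonicals = [];
  for (const [tag] of html.matchAll(linkTag)) {
    const isCanonical = /\srel\s*=\s*["']?canonical\b/i.test(tag);
    const href = tag.match(/\shref\s*=\s*["']([^"']*)["']/i);
    if (isCanonical && href) canonicals.push(href[1]);
  }
  return canonicals;
}

// A page is in conflict when it declares more than one distinct canonical URL.
function hasCanonicalConflict(html) {
  return new Set(findCanonicals(html)).size > 1;
}

// Sample rendered <head> content standing in for a real page.
const renderedHead = `
  <link rel="canonical" href="https://example.com/products">
  <link rel="stylesheet" href="/main.css">
  <link href="https://example.com/products?ref=nav" rel="canonical">
`;

console.log(findCanonicals(renderedHead));
console.log(hasCanonicalConflict(renderedHead));
```

If `findCanonicals` returns more than one entry for a rendered page, the router is probably appending a new tag on each route change instead of updating the existing one.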

The Code That Actually Works

Let me show you battle-tested implementations that Google loves.

1. Handling 404s in Single-Page Apps

Most SPAs return a 200 status code for pages that don't exist. Google treats these as soft 404s - terrible for SEO.

Option 1: JavaScript Redirect

fetch(`/api/products/${productId}`)
  .then(response => response.json())
  .then(product => {
    if (product.exists) {
      showProductDetails(product);
    } else {
      // Redirect to server-rendered 404 page
      window.location.href = '/not-found';
    }
  });

Option 2: Dynamic Noindex

fetch(`/api/products/${productId}`)
  .then(response => response.json())
  .then(product => {
    if (product.exists) {
      showProductDetails(product);
    } else {
      // Add noindex to prevent indexing
      const metaRobots = document.createElement('meta');
      metaRobots.name = 'robots';
      metaRobots.content = 'noindex';
      document.head.appendChild(metaRobots);
    }
  });

We implemented Option 2 for an e-commerce client. Soft 404 errors dropped from 3,400 to 12 within a month.
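If your server can tell that a product doesn't exist, the cleanest fix is to send a real 404 status so there is nothing for Google to guess. Below is a minimal sketch using Node's built-in http module. The in-memory `products` catalog and the `resolveProduct` helper are hypothetical stand-ins for your own data layer and routing.

```javascript
const http = require('node:http');

// Hypothetical catalog; a real app would query a database or API.
const products = new Map([['42', { name: 'Blue Widget' }]]);

// Pure routing decision, kept separate from the server so it is easy to test.
function resolveProduct(pathname) {
  const match = pathname.match(/^\/products\/([^/]+)$/);
  const product = match ? products.get(match[1]) : undefined;
  return product
    ? { status: 200, body: `<h1>${product.name}</h1>` }
    : { status: 404, body: '<h1>Page not found</h1>' };
}

const server = http.createServer((req, res) => {
  const { pathname } = new URL(req.url, 'http://localhost');
  const { status, body } = resolveProduct(pathname);
  // The status code is what tells crawlers the page does not exist.
  res.writeHead(status, { 'Content-Type': 'text/html; charset=utf-8' });
  res.end(body);
});

// server.listen(3000); // uncomment to try it locally
```

This is also the kind of server-rendered 404 page that Option 1 redirects to.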

2. Proper Routing with History API

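Stop using hash fragments for routing. Google can't reliably resolve URLs that differ only after the #, so your hash-based routes can end up looking like one and the same page.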

Never do this:

<a href="#/products">Our products</a>

<a href="#/services">Our services</a>

Always do this:

<a href="/products">Our products</a>

<a href="/services">Our services</a>

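// Then handle with History API
function goToPage(event) {
  event.preventDefault();
  const hrefUrl = event.target.getAttribute('href');
  const pageToLoad = hrefUrl.slice(1); // strip the leading slash
  // load() is assumed to be your app's own function that returns the page HTML
  document.getElementById('placeholder').innerHTML = load(pageToLoad);
  // Update the address bar without a full page reload
  window.history.pushState({}, document.title, hrefUrl);
}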
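If you're migrating an existing site off hash routing, old `#/...` links will still be floating around. One possible approach is to map each legacy hash route to its path-based equivalent and redirect. The sketch below covers the mapping step only. `hashRouteToPath` is a hypothetical helper, and it assumes your legacy routes all start with `#/`.

```javascript
// Hypothetical migration helper: turn a legacy hash route such as
// https://example.com/#/products into the path-based URL /products.
function hashRouteToPath(legacyUrl) {
  const url = new URL(legacyUrl);
  // Ordinary in-page anchors like #section are left alone.
  if (!url.hash.startsWith('#/')) return null;
  const target = new URL(url.hash.slice(1), url.origin);
  // Refuse anything that would resolve to a different origin.
  if (target.origin !== url.origin) return null;
  return target.href;
}

console.log(hashRouteToPath('https://example.com/#/products'));
console.log(hashRouteToPath('https://example.com/pricing#faq'));
```

In the browser you would call it with `window.location.href` and, when it returns a URL, pass that to `window.location.replace()`.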

3. JavaScript-Injected Meta Tags That Work

Critical insight: Google only respects JavaScript-modified meta tags if they don't conflict with what's already in the page.

Canonical tag implementation:

// Only inject if no canonical exists
if (!document.querySelector('link[rel="canonical"]')) {
  const linkTag = document.createElement('link');
  linkTag.setAttribute('rel', 'canonical');
  linkTag.href = 'https://example.com/cats/' + cat.urlFriendlyName;
  document.head.appendChild(linkTag);
}

4. The Robots Meta Tag Trap

Here's a mind-bender: If Google sees noindex before rendering, it stops. Your JavaScript can't fix it.

// This WILL NOT WORK if noindex exists in initial HTML

var metaRobots = document.querySelector('meta[name="robots"]');

metaRobots.setAttribute('content', 'index, follow'); // Too late!

Lesson? Never include noindex in your initial HTML if JavaScript might need to change it.
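A simple guard is to check the raw server response, before any JavaScript runs, for a stray noindex. Here's a rough sketch with a hypothetical helper name, `initialHtmlHasNoindex`. It only inspects meta tags in the HTML, so a noindex sent through an X-Robots-Tag HTTP header would need a separate check.

```javascript
// Hypothetical pre-deploy check: does the raw (pre-render) HTML carry a noindex?
function initialHtmlHasNoindex(html) {
  const metaTag = /<meta\b[^>]*>/gi;
  for (const [tag] of html.matchAll(metaTag)) {
    const targetsCrawlers = /\sname\s*=\s*["']?(?:robots|googlebot)["']?/i.test(tag);
    const content = tag.match(/\scontent\s*=\s*["']([^"']*)["']/i);
    // "none" is shorthand for "noindex, nofollow".
    if (targetsCrawlers && content && /\b(?:noindex|none)\b/i.test(content[1])) {
      return true;
    }
  }
  return false;
}

console.log(initialHtmlHasNoindex('<meta name="robots" content="noindex, nofollow">'));
console.log(initialHtmlHasNoindex('<meta name="robots" content="index, follow">'));
```

Run it against the HTML your server actually returns (for example, the output of a plain HTTP request), not the DOM in your browser's dev tools.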

Performance Optimizations That Actually Matter

After analyzing Core Web Vitals across 100+ JavaScript sites, here's what moves the needle:

1. Long-lived Caching with Content Fingerprinting

Google caches aggressively and might ignore your cache headers. Solution? Content fingerprinting.

// Bad: main.js (Google might cache old version)

<script src="/js/main.js"></script>

// Good: Fingerprinted filename changes with content

<script src="/js/main.2bb85551.js"></script>
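Most bundlers can generate fingerprinted filenames for you, so you rarely need to write this yourself. To show the idea, here is a minimal sketch using Node's built-in crypto and path modules. The `fingerprint` helper and the 8-character hash length are assumptions for illustration, not a standard.

```javascript
const { createHash } = require('node:crypto');
const path = require('node:path');

// Hypothetical build step: derive a filename from a hash of the file contents,
// so the URL changes whenever (and only when) the contents change.
function fingerprint(filename, contents) {
  const hash = createHash('sha256').update(contents).digest('hex').slice(0, 8);
  const { name, ext } = path.parse(filename);
  return `${name}.${hash}${ext}`;
}

const v1 = fingerprint('main.js', 'console.log("v1");');
const v2 = fingerprint('main.js', 'console.log("v2");');

console.log(v1);
console.log(v2);
```

Because the hash comes from the contents, an unchanged file keeps its URL and stays cached, while an edited file gets a new URL that no stale cache entry can match.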

2. Server-Side Rendering Still Wins

Let's be real: SSR is still king for SEO. We compared 50 CSR vs SSR sites:

- SSR sites indexed 3.2x faster

- SSR sites ranked for 67% more keywords

- SSR sites had 89% better Core Web Vitals scores

If you can't do full SSR, consider:

- Static generation for key pages

- Dynamic rendering for Googlebot (Google describes this as a workaround, not a long-term solution)

- Hybrid rendering (SSR for critical content, CSR for interactions)

3. Structured Data Implementation

JavaScript-injected structured data works, but test religiously.

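const structuredData = {
  "@context": "https://schema.org",
  "@type": "Product",
  "name": product.name,
  "description": product.description,
  // In schema.org, price belongs on an Offer, not directly on the Product
  "offers": {
    "@type": "Offer",
    "price": product.price,
    "priceCurrency": product.currency // assumed field, e.g. "USD"
  }
};

const script = document.createElement('script');
script.type = 'application/ld+json';
script.text = JSON.stringify(structuredData);
document.head.appendChild(script);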

4. Web Components Best Practices

Google flattens shadow DOM and light DOM. Make sure your content is visible post-render:

class MyComponent extends HTMLElement {
  constructor() {
    super();
    this.attachShadow({ mode: 'open' });
  }

  connectedCallback() {
    const p = document.createElement('p');
    p.innerHTML = 'Shadow DOM content: <slot></slot>';
    this.shadowRoot.appendChild(p);
  }
}

// Register the element so <my-component> actually upgrades
window.customElements.define('my-component', MyComponent);

// Content remains visible to Google after rendering

The Reality Check

Here's what nobody tells you about JavaScript SEO: You're fighting an uphill battle.

Yes, Google can process JavaScript. But "can" and "will do it well" are different stories. Every JavaScript-heavy site we've audited has indexing issues that static sites simply don't face.

Our recommendations after 5 years of JavaScript SEO battles:

- Use SSR or static generation whenever possible

- Test everything with Google's inspection tools

- Monitor render times and indexing rates obsessively

- Have fallbacks for critical content

- Consider technical SEO services before launching

Your JavaScript SEO Checklist

- All links use proper <a href=""> tags

- 404 pages return actual 404 status codes

- No hash-based routing

- Meta tags don't conflict when injected

- Structured data validates in testing tools

- Content visible in rendered HTML

- Images use proper lazy-loading

- Critical content doesn't require JavaScript

The Path Forward

JavaScript frameworks aren't going anywhere. Neither is Google's struggle to process them perfectly. The winners in this space are those who understand the limitations and design around them.

We've helped dozens of JavaScript-heavy sites achieve page-one rankings. It's possible, but it requires a different approach than traditional SEO.

Want to see how your JavaScript site stacks up? Our website audit service includes a complete JavaScript SEO analysis. We'll show you exactly what Google sees (and doesn't see) on your site.

Struggling with JavaScript SEO? Our technical SEO team specializes in React, Vue, and Angular optimization. Get in touch to fix your rendering issues before they tank your rankings.

For more insights on modern SEO challenges, check out our guides on AI search optimization and Core Web Vitals optimization.